Stat Cafe - Raymond Wong

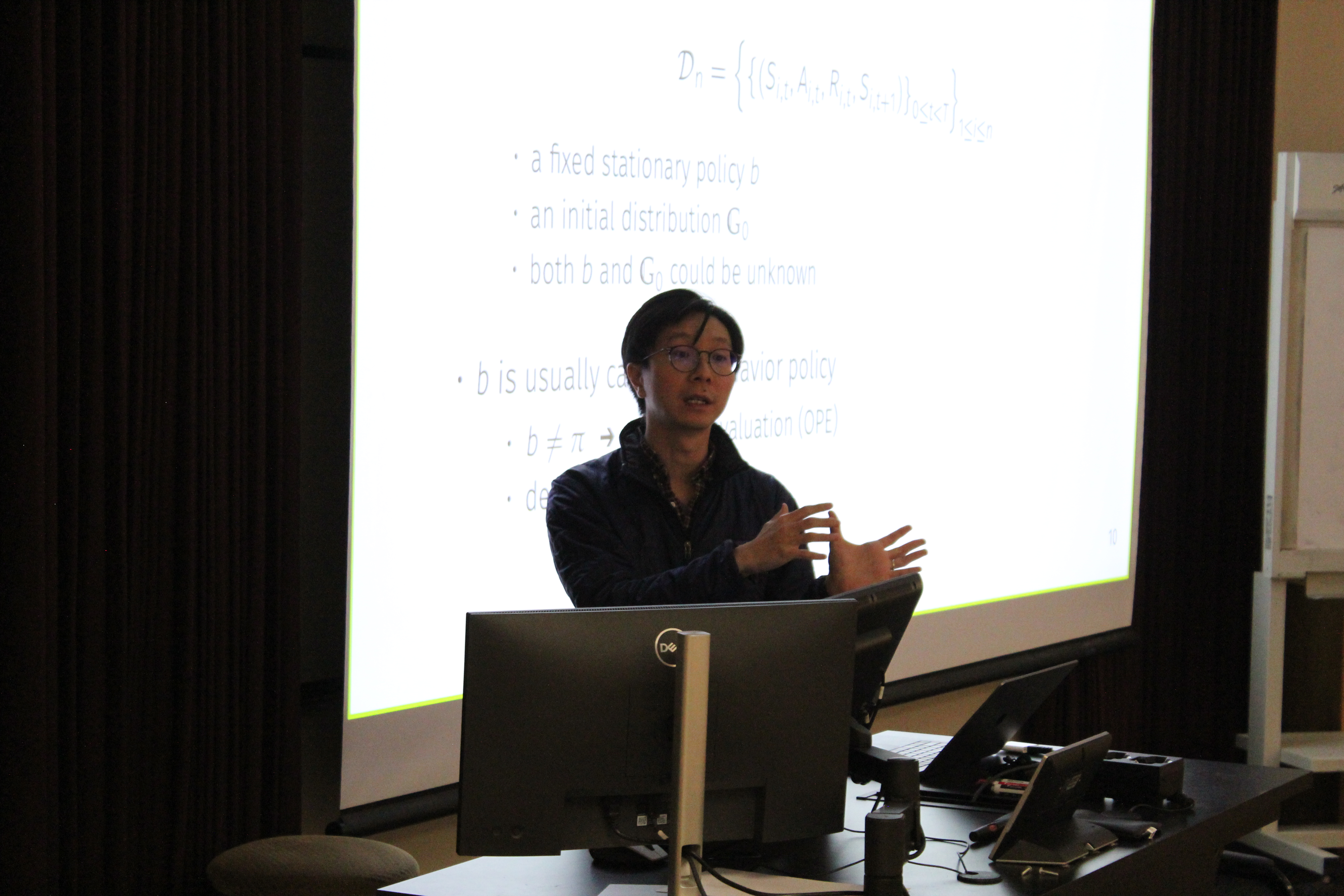

Balancing Weights for Offline Reinforcement Learning

- Time: Monday 2/17/2025 from 11:30 AM to 12:30 PM

- Location: BLOC 457

Description

Offline policy evaluation is a fundamental and challenging problem in reinforcement learning. In this talk, I will focus on estimating the value of a target policy from pre-collected data generated by a possibly different policy, under the framework of infinite-horizon Markov decision processes. I will discuss a novel estimator of the policy value that uses approximately projected state-action balancing weights. These weights are motivated by the marginal importance sampling method in reinforcement learning and by the covariate balancing idea in causal inference. The corresponding asymptotic convergence results will be presented. Our results scale with both the number of trajectories and the number of decision points in each trajectory. As a result, consistency can still be achieved with a limited number of subjects, provided the number of decision points diverges.

Gallery (Photos by Samantha Williams)